TLDR: UMA Uses AI to Find Truth Onchain

UMA’s Optimistic Truth Bot (OTB) is an AI-powered agent that proposes real-world data to oracles accurately, transparently, and under human oversight. The OTB supports humans rather than replacing them, proving AI can help verify onchain truth at scale.

Introduction

AI is flooding the internet with misinformation. But what if the same tech could help verify the truth instead?

It sounds ironic. The tools responsible for automating the spread of half-truths and propaganda might also hold the key to fighting back. In a world increasingly shaped by algorithms, the challenge isn’t just about catching up to AI. It’s about using it with precision and integrity.

UMA’s Optimistic Truth Bot (OTB) is an example of what that could look like. It’s a system that uses AI not to replace humans, but to support them by proposing answers to real-world questions that UMA's decentralized community can then verify or dispute. It’s fast, scalable, and already showing strong performance.

But this didn’t happen overnight. And it didn’t happen without failure. UMA’s current AI agent is the result of experimentation, setbacks, and iteration. And its success is a case study in how far AI, and our understanding of how to use it responsibly, has come.

Here's the story.

UMA’s First AI Attempt: A Good Idea That Fell Short

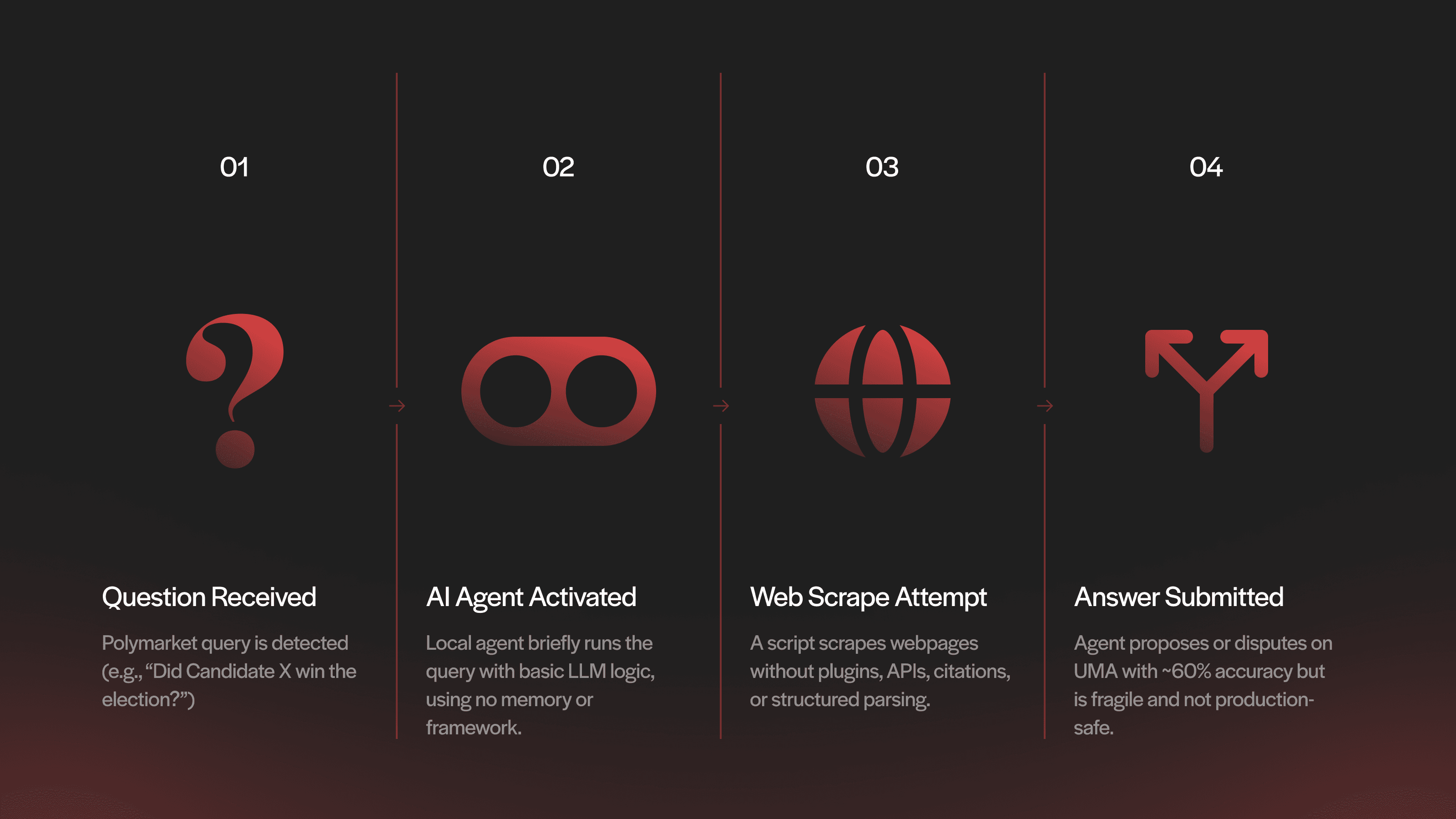

Roughly a year ago, UMA initiated an experiment: could an AI agent serve as a proposer in the optimistic oracle?

At the time, large language models like LLaMA 3 had gained attention, and developers were beginning to stitch together autonomous agents with basic research abilities. UMA worked with an external contributor who built a prototype agent that ran locally and attempted to scrape information from the web to propose answers and dispute resolutions for prediction markets on Polymarket.

On paper, it sounded promising. But in practice, the model struggled. The scraping system couldn’t consistently return accurate or verifiable data, the model’s reasoning often lacked clarity or rigor, and the entire setup was slow and difficult to iterate on. Most importantly, it only achieved about 60% accuracy, which was too low to trust with real onchain outcomes. And it showed little room for improvement, making it completely non-viable.

However, the experiment wasn’t a failure. It was an early field test. The insights were clear and foundational:

Data retrieval was the biggest blocker. LLMs at the time couldn’t reliably fetch up-to-date, verifiable information.

The models weren’t robust enough to consistently interpret or reason through the data they received.

A modular approach was needed. The original monolithic script was brittle and hard to scale, pointing toward a future composable design.

Performance was too slow. Running the bot locally made it inefficient for high-volume, onchain needs.

Luckily, the AI landscape evolved quickly.

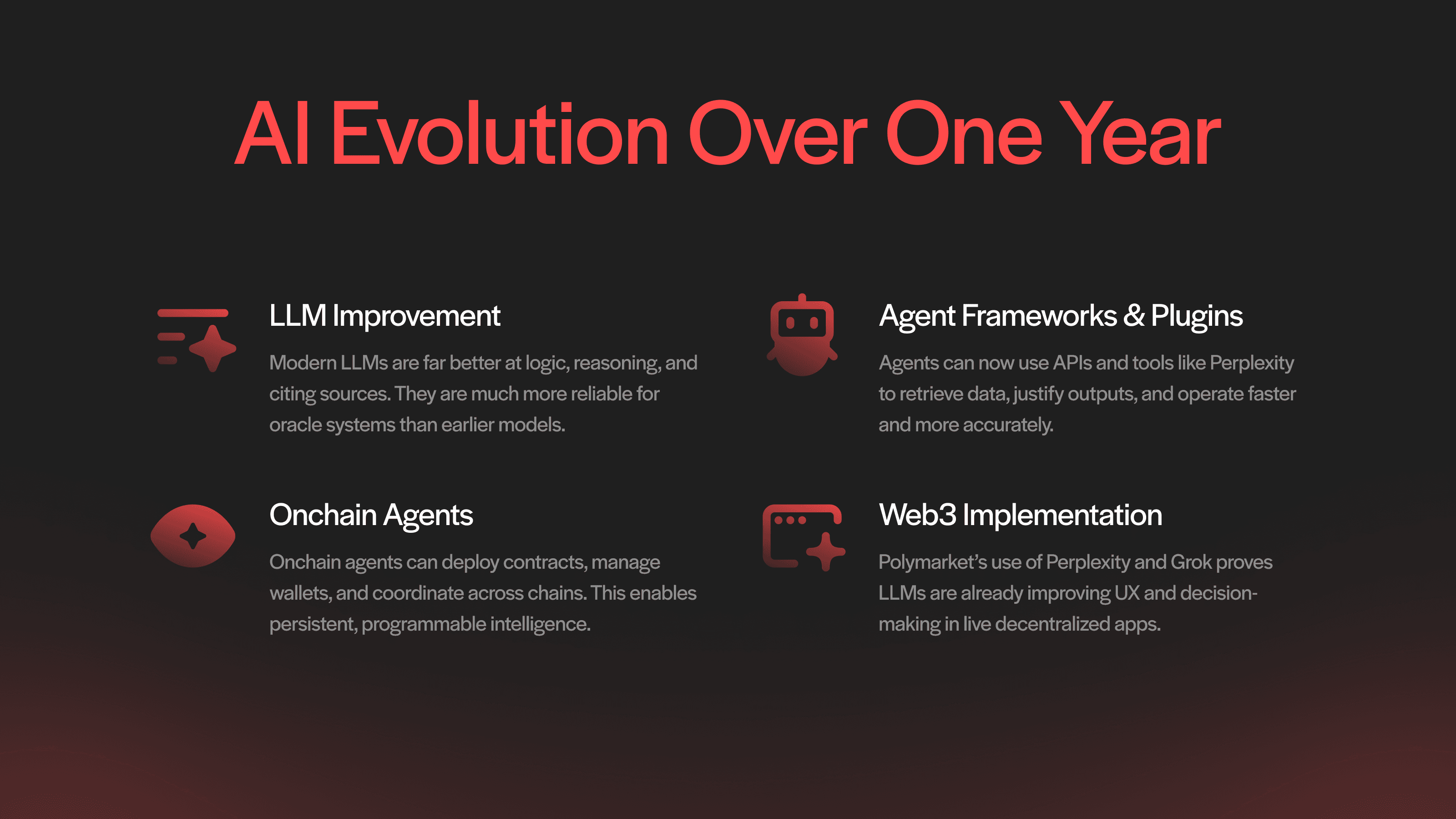

What Changed: AI Infrastructure and Capabilities Leveled Up

In the year since UMA’s first AI attempt, nearly every component of the AI stack has matured. From smarter LLMs to agent frameworks and modular web3 tools, this leap in infrastructure laid the foundation for more reliable, verifiable, and scalable truth systems.

Language Models: Smarter, Safer, and Source-Aware

LLMs like GPT-4, Claude 3, and LLaMA 4 are not only more fluent than their predecessors; they have also improved significantly in logic, multi-step reasoning, and fact-checking, all of which are critical for oracle systems.

Newer models can also trace public information sources more effectively, weigh contradictory data, and produce answers with citations. These abilities mirror the needs of the optimistic oracle, where each proposed data point must be verifiable, justifiable, and clear enough to pass public scrutiny. With these capabilities, LLMs are far better suited to serve as proposers in oracle ecosystems than they were even a year ago.

Agent Frameworks and Retrieval Tools: The Plugin Revolution

New agent frameworks have emerged that allow LLMs to use plugins, APIs, and retrieval tools like Perplexity to ground their research in verifiable information. These tools extend the models’ capabilities and enable targeted fact-finding and logic processing, both of which are critical for oracle tasks.

This progress directly addresses UMA’s earlier obstacles:

Improved data retrieval: Retrieval-augmented generation and web-connected tools replace fragile scraping mechanisms.

Stronger reasoning: Agents now use multi-step logic chains and tool use to justify outputs.

Faster execution: Cloud-based orchestration replaces local-only compute for better speed and scale.

With these advances, agents can produce traceable, source-backed data, making them far better suited for oracle applications than before.

The Rise of Modular, Onchain AI Agents

The AI evolution is happening in web3 as well, and a new class of modular, onchain agents is reshaping what’s possible:

LLM agents today can interact with smart contracts, manage wallets, and execute workflows.

Spectral Syntax enables onchain agents and natural-language-to-smart-contract deployment.

ElizaOS and other platforms support modular agents for multi-platform coordination.

These capabilities unlock a key transition from static, one-off AI tools to programmable, persistent agents that can operate across blockchains. This opens up new possibilities for onchain arbitration, automation, and crosschain intelligence.

Real-World Signals: AI-Powered Insights

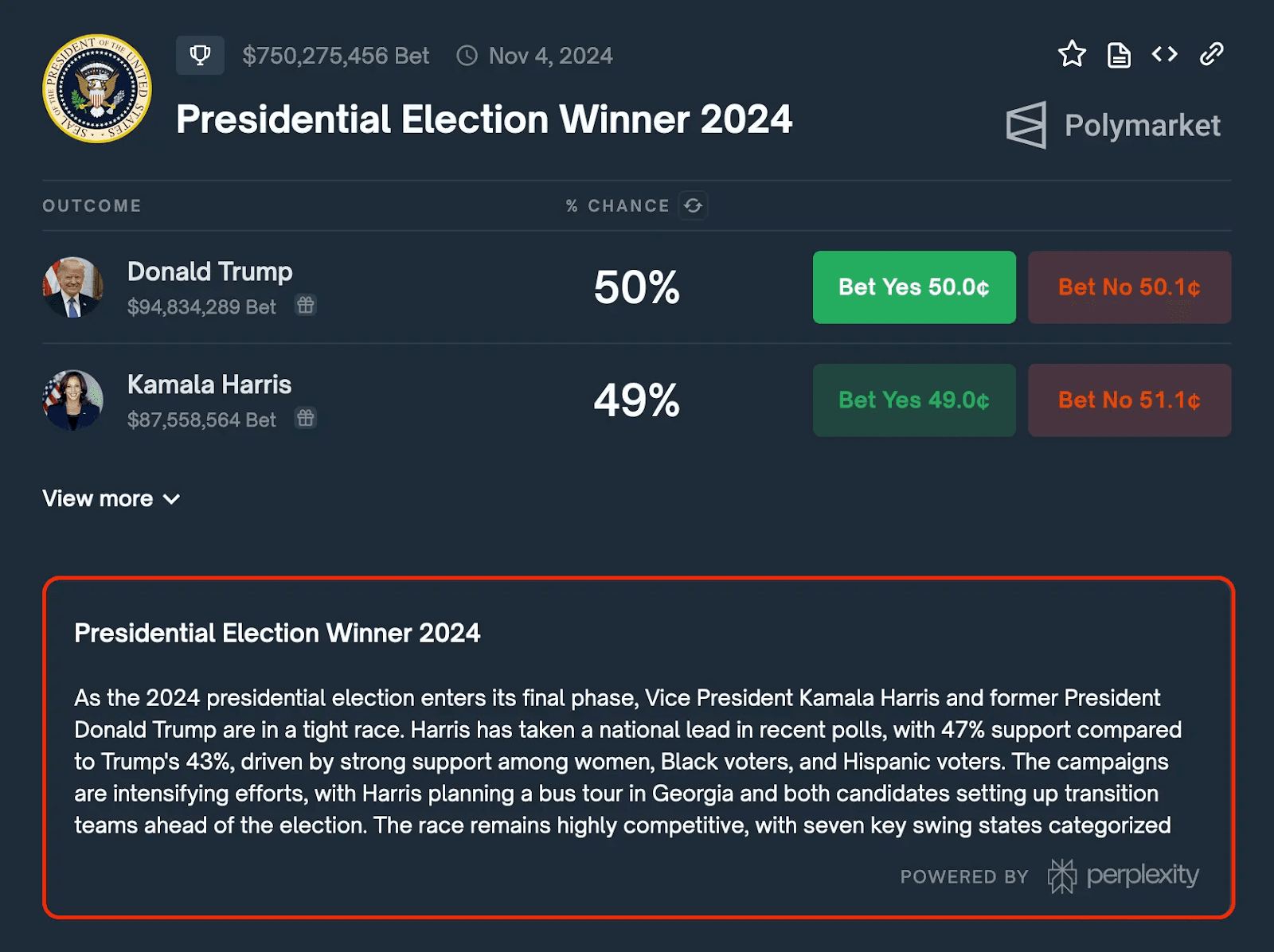

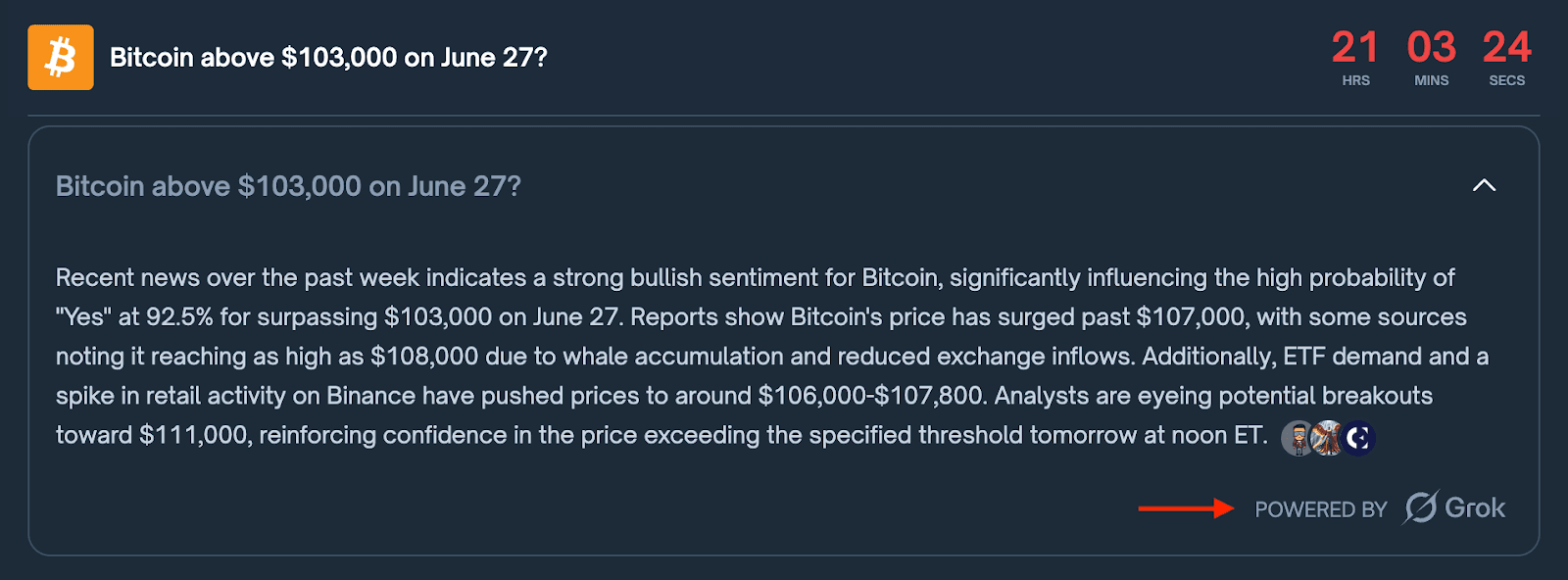

Another key development came from the broader web3 ecosystem. In August 2024, Polymarket integrated Perplexity AI to help users better interpret and navigate complex markets. This was a massive UX upgrade, proving that LLMs can be embedded into decision-making layers of decentralized applications.

Further fueling this momentum, xAI’s recent partnership with Polymarket introduced Grok-powered insights directly into market pages, making real-time AI assistance a native feature of the user experience.

These initiatives, combined with UMA’s design choice to use Perplexity in its own Optimistic Truth Bot (we'll dive into this deeper in a moment), reinforced a larger trend: LLMs are no longer hypothetical research assistants. They’re actively helping decentralized communities make sense of the world.

The Optimistic Truth Bot: UMA’s Modular Oracle Agent

Building on the lessons of our first attempt and the industry-wide improvements in the LLM landscape, we built the Optimistic Truth Bot (OTB).

The OTB is a modular, agentic system designed to propose data to UMA’s optimistic oracle with high accuracy, clear reasoning, and full transparency. It doesn’t pretend to be perfect, but it is consistent, cautious, and well-aligned with UMA’s purpose: to verify real-world data onchain.

With that said, the OTB is still in its experimental phase and not actively proposing onchain. For now, it is running in parallel with the optimistic oracle, proposing resolutions to Polymarket prediction markets offchain.

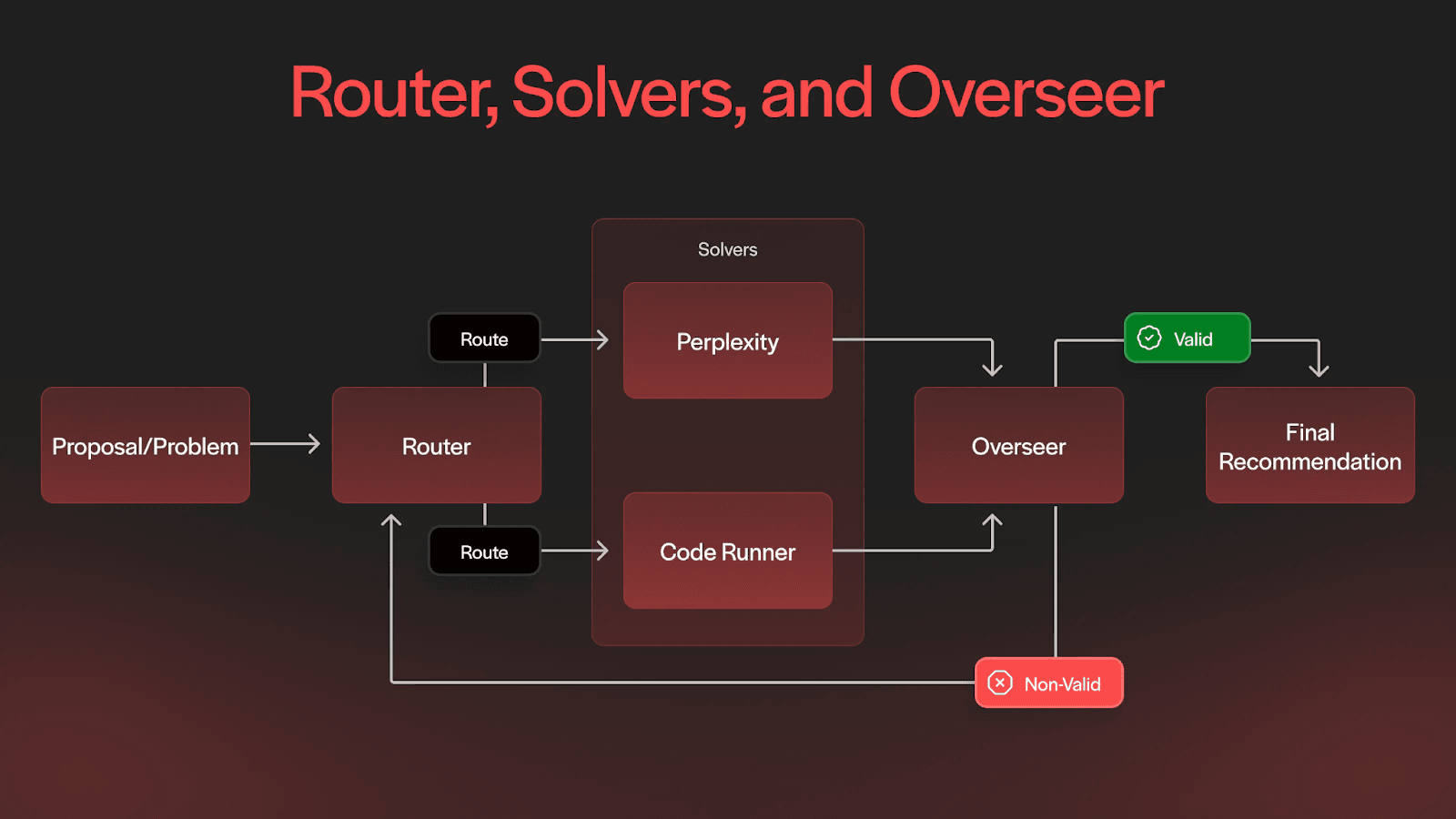

How the OTB Works

When a new query comes in (e.g., a prediction market asking whether a certain political candidate won an election), the OTB follows a multi-step process:

Routing: A Router module classifies the query and directs it to the appropriate Solver. For fact-based questions, such as sports results or election outcomes, it sends the task to a tool that uses Perplexity to search and synthesize reputable, real-time data. For computational or structured questions, it may use a code execution environment to generate precise outputs.

Solving: The Solver gathers relevant data and generates a proposed answer based on logic and facts.

Evaluation: The Overseer module then steps in. It evaluates the proposal for clarity, accuracy, and timing. If there's ambiguity or the data appears incomplete or premature, the Overseer can reject the proposal or request a retry.

This layered process ensures the bot doesn’t just generate answers, but filters, refines, and verifies its outputs before they ever reach the oracle. It’s this internal skepticism that helps avoid the kinds of errors that have historically triggered disputes in UMA’s system.
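To make the Router, Solver, and Overseer flow concrete, here is a minimal sketch in Python. It is not the OTB’s actual code: the keyword-based router, the stub solvers, the `confidence` field, and the `MIN_CONFIDENCE` threshold are all assumptions made for illustration, standing in for the LLM-driven components described above.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, List, Tuple


class Verdict(Enum):
    ACCEPT = "accept"
    RETRY = "retry"
    REJECT = "reject"


@dataclass
class Proposal:
    answer: str
    sources: List[str] = field(default_factory=list)
    confidence: float = 0.0  # hypothetical 0-1 score, not a real OTB field


MIN_CONFIDENCE = 0.9  # assumed threshold, chosen only for this sketch


def route(query: str) -> str:
    """Router: choose a solver. Keyword matching stands in for an LLM classifier."""
    computational = ("calculate", "average", "price")
    if any(word in query.lower() for word in computational):
        return "code_runner"
    return "search"


def oversee(proposal: Proposal, event_finished: bool) -> Verdict:
    """Overseer: check timing, evidence, and confidence before proposing."""
    if not event_finished:
        return Verdict.RETRY  # premature: wait and try again later
    if not proposal.sources:
        return Verdict.REJECT  # nothing a human could verify against
    if proposal.confidence < MIN_CONFIDENCE:
        return Verdict.RETRY
    return Verdict.ACCEPT


def run_pipeline(
    query: str,
    solvers: Dict[str, Callable[[str], Proposal]],
    event_finished: bool,
) -> Tuple[Proposal, Verdict]:
    """Route the query, solve it, then let the Overseer judge the result."""
    proposal = solvers[route(query)](query)
    return proposal, oversee(proposal, event_finished)


# Stub solvers; real ones would call a search tool or a code sandbox.
def search_solver(query: str) -> Proposal:
    return Proposal("YES", ["https://example.com/result"], 0.97)


def code_solver(query: str) -> Proposal:
    return Proposal("42.0", ["computed locally"], 0.95)


SOLVERS = {"search": search_solver, "code_runner": code_solver}
```

Calling `run_pipeline("Did Team A win the final?", SOLVERS, event_finished=False)` returns a `RETRY` verdict in this sketch, which mirrors the Overseer’s habit of waiting rather than guessing.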

Is the OTB Effective?

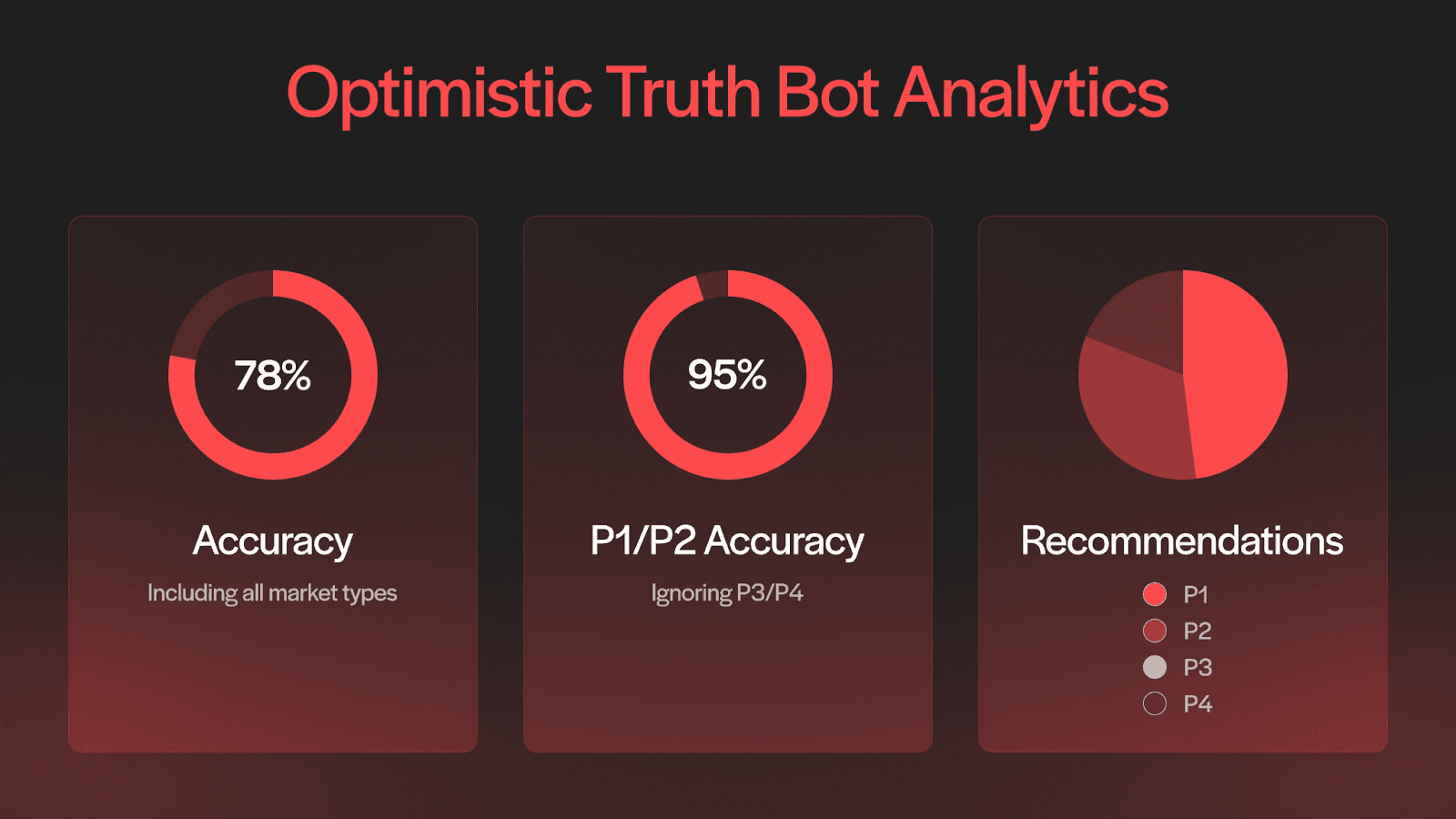

The results speak for themselves. In its current form, the OTB achieves ~95% accuracy when excluding early or ambiguous cases, with near-perfect performance on well-structured, objective questions. It is designed as a cautious system: the Overseer rejects proposals if the answer lacks sufficient evidence or confidence, and then signals the system to wait and retry later. This rigorous pipeline helps prevent false positives, enforcing a level of restraint that is rare in AI and vital for oracles.

However, the system is not perfect yet. When premature or low-confidence proposals are included, overall accuracy across all markets drops to ~78%, highlighting where further refinement is needed.
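The gap between the two figures comes down to which proposals are counted. The sketch below shows the arithmetic on a deliberately tiny, synthetic sample. The `Outcome` record and the counts are made up for illustration and are not the OTB’s evaluation data.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Outcome:
    correct: bool
    premature: bool  # proposed too early or flagged low-confidence (assumed label)


def accuracy(outcomes: List[Outcome], exclude_premature: bool) -> float:
    """Share of correct proposals, optionally ignoring premature ones."""
    pool = [o for o in outcomes if not (exclude_premature and o.premature)]
    if not pool:
        return 0.0
    return sum(o.correct for o in pool) / len(pool)


# Synthetic sample: 9 correct, 1 wrong on settled questions, 2 wrong premature calls.
sample = (
    [Outcome(correct=True, premature=False)] * 9
    + [Outcome(correct=False, premature=False)]
    + [Outcome(correct=False, premature=True)] * 2
)

filtered = accuracy(sample, exclude_premature=True)   # 9 of 10 counted proposals
overall = accuracy(sample, exclude_premature=False)   # 9 of all 12 proposals
```

The same set of correct answers yields a lower score once premature proposals enter the denominator, which is why reducing early calls is the most direct lever on overall accuracy.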

That said, the OTB is continuously improving. As we refine its logic and the solvers sharpen, we expect its accuracy to keep rising.

The Bigger Question: How Should AI Contribute Towards Onchain Truth?

AI is already being used to assist decision-making and arbitration in web3. A few prominent examples:

Arbitrus.ai for smart contract dispute resolution.

Kleros Harmony for AI-powered mediation.

MakerDAO’s GAITs initiative, which explores governance support through LLM summarization and filtering.

These projects are exploring ways to let AI partially replace or augment human judgment. But UMA offers a different path.

At UMA, we don’t believe AI should be the final judge. That’s our job as humans. LLMs are excellent proposers that provide “first drafts” in a system built for public verification. Because UMA’s architecture is challenge-based and dispute-driven, any answer can be questioned and escalated. This ensures that the truth isn’t accepted until it’s tested and verified by human participants.

This difference is both technical and philosophical:

Deterministic LLM systems aim for speed, but their outputs can be hard to audit or challenge.

UMA’s AI implementation addresses scalability and prioritizes verifiability, even if it means slower throughput. Anyone can challenge a proposed answer, and the community decides what gets recorded onchain.
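The “AI proposes, humans decide” pattern can be sketched as a small state machine. This is a simplified illustration rather than UMA’s contract logic: the `Request` class, the `challenge_window` parameter, and the `human_vote` argument are hypothetical names, and economic incentives such as bonds are left out entirely.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    PROPOSED = "proposed"
    DISPUTED = "disputed"
    SETTLED = "settled"


@dataclass
class Request:
    question: str
    proposed_answer: str      # the AI's "first draft"
    proposed_at: int          # timestamp in seconds
    challenge_window: int     # assumed window length in seconds
    status: Status = Status.PROPOSED
    final_answer: Optional[str] = None

    def _window_closed(self, now: int) -> bool:
        return now >= self.proposed_at + self.challenge_window

    def dispute(self, now: int) -> None:
        """Anyone may challenge while the window is still open."""
        if self.status is not Status.PROPOSED:
            raise ValueError("only an open proposal can be disputed")
        if self._window_closed(now):
            raise ValueError("challenge window has closed")
        self.status = Status.DISPUTED

    def settle(self, now: int, human_vote: Optional[str] = None) -> str:
        """Unchallenged proposals stand; disputed ones defer to the human vote."""
        if self.status is Status.SETTLED:
            raise ValueError("request is already settled")
        if self.status is Status.DISPUTED:
            if human_vote is None:
                raise ValueError("a disputed request needs a human vote")
            self.final_answer = human_vote
        elif self._window_closed(now):
            self.final_answer = self.proposed_answer
        else:
            raise ValueError("challenge window is still open")
        self.status = Status.SETTLED
        return self.final_answer
```

In this sketch the AI’s answer becomes final only if nobody challenges it in time. Once a dispute is raised, the proposed answer carries no special weight and the human vote decides the outcome.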

In an age of AI-generated noise, UMA’s model is a reminder: speed isn’t the same as truth. And when the stakes are high, trust comes from transparency.

Where It’s Going: A New Model for Onchain Truth

The current version of UMA’s OTB focuses on prediction markets, which are fast-moving, binary, and relatively easy to verify. But the implications are much broader.

In time, the system could be used to propose outcomes for governance proposals, DAO votes, or any offchain event that needs to be recorded onchain. It could handle regulatory events, financial benchmarks, or even long-tail claims about real-world actions. Because of its modular design, the OTB could evolve into a network of domain-specific agents, each trained or fine-tuned to handle specific types of questions with expert precision (e.g., one for election results, one for financial benchmarks, another for governance outcomes, etc.).

As it matures, the OTB may begin proposing directly onchain, handling a growing share of oracle volume in a more scalable way. For now, it simply provides offchain suggestions. Whatever its role, it will always operate within the optimistic oracle’s core architecture: open, challengeable, and economically secure.

At the end of the day, AI systems should never replace human decision-making. The true opportunity lies in using AI to augment our current systems while keeping a human settlement layer as a backstop. In other words, the goal is a hybrid model where AI proposes and humans decide.

This is the core AI philosophy that we’ve chosen. And it is exactly what we’re building.

Open Questions We’re Still Exploring

Even with this progress, the work is far from done. Some key questions remain:

How do we incentivize communities to challenge AI proposals when the model gets something wrong?

How should we manage proposal conflicts when multiple agents present competing data for the same request?

How do we ensure transparency as AI models become increasingly complex and multi-modal?

Will “AI proposes, humans decide” become the standard pattern for all oracle systems? Or are there domains where full automation is viable?

UMA doesn’t pretend to have all the answers. But it’s building the system to ask the right questions, and it's doing so in public.

Conclusion: Finding Truth Onchain, Together

As AI continues to evolve, so do the threats it poses and the tools it offers. The same technology that floods the web with misinformation can also be used to fight it. The difference lies in how we design, govern, and challenge the systems that use it.

UMA’s OTB is a real, working example of what happens when we don’t let AI make decisions in isolation. The OTB is faster than humans, sure. But more importantly, it knows when to wait. It knows when to defer. It knows when to ask for help.

Combined with human oversight, AI can help us find the truth and make better decisions at scale. And in a world where truth is getting harder to find, that is exactly what we need.

Ready to be a part of the movement? Explore UMA.