UMA's AI Experiment: Can AI Agents Enhance the Optimistic Oracle?

TL;DR:

UMA has launched the Optimistic Truth Bot, an experimental agentic system that proposes answers to real-time questions in UMA’s Optimistic Oracle.

An AI proposer has the potential to boost efficiency while preserving human oversight, and it’s already achieving upwards of 90% accuracy on real prediction markets with clear Yes or No outcomes (about 78% across all market types).

In an era where misinformation spreads faster than facts, verifying what's true, especially onchain, is more critical than ever. That’s why UMA exists.

As blockchain oracles go, UMA has always been unique. While other oracles like Chainlink provide simple price feeds to plug into smart contracts, UMA focuses on complex, real-world data.

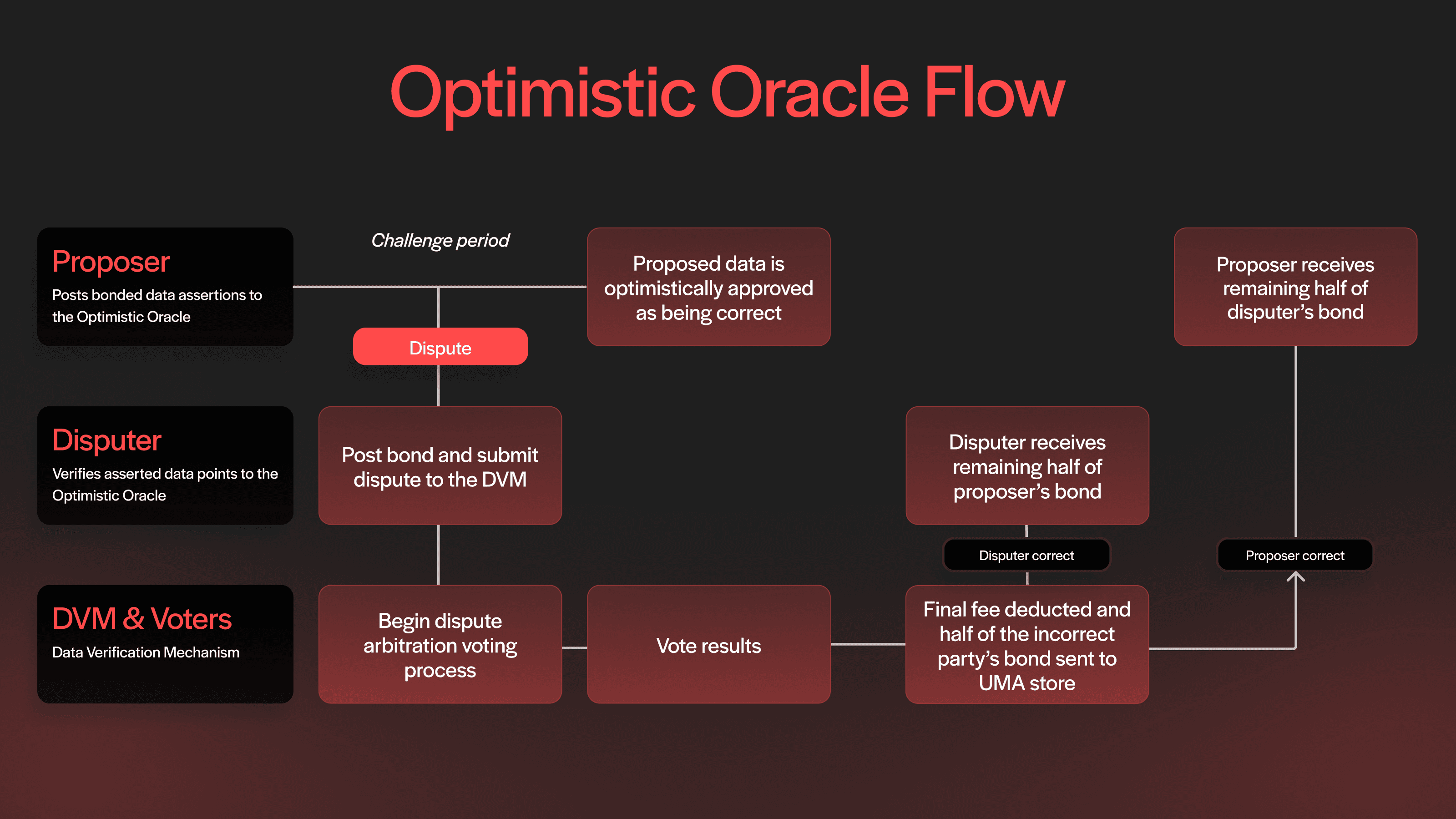

UMA’s Optimistic Oracle (OO) brings nuanced information onchain by letting anyone propose an answer to a question, and giving others the chance to dispute it. If no one disputes, the answer is accepted as true, which makes the system optimistic.
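To make the optimistic flow concrete, here is a minimal Python sketch of a single request’s lifecycle: propose, optionally dispute within a challenge window, and otherwise settle. It is a toy model, not UMA’s contract interface. The `Request` class, the `liveness_seconds` window, and the method names are hypothetical, and bonds, rewards, and the dispute vote itself are left out.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    PROPOSED = "proposed"
    DISPUTED = "disputed"
    SETTLED = "settled"


@dataclass
class Request:
    question: str
    liveness_seconds: int                # hypothetical challenge-window length
    proposal: Optional[str] = None
    proposed_at: Optional[int] = None
    status: Optional[Status] = None

    def propose(self, answer: str, now: int) -> None:
        """Anyone may propose an answer; this opens the challenge window."""
        if self.status is not None:
            raise ValueError("request already has a proposal")
        self.proposal, self.proposed_at, self.status = answer, now, Status.PROPOSED

    def dispute(self, now: int) -> None:
        """Anyone may dispute while the window is open; the request then escalates."""
        if self.status is not Status.PROPOSED or now >= self.proposed_at + self.liveness_seconds:
            raise ValueError("no open proposal to dispute")
        self.status = Status.DISPUTED

    def settle(self, now: int) -> str:
        """The optimistic step: an undisputed proposal is accepted once the window closes."""
        if self.status is not Status.PROPOSED or now < self.proposed_at + self.liveness_seconds:
            raise ValueError("cannot settle yet")
        self.status = Status.SETTLED
        return self.proposal
```

For example, proposing `"Yes"` at time 0 with a 7,200-second window and calling `settle(now=7200)` returns `"Yes"`. A `dispute` inside the window instead moves the request to `DISPUTED`, which is where, in UMA, it would be sent to a vote.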

The OO is the only oracle that can handle natural language questions at scale, like “Did Romania’s court annul the election?” or “Was there a China-Vietnam trade deal before June?”. It doesn’t rely on APIs. It depends on people: a decentralized network of voters who review, propose, and verify nuanced claims.

Most notably, this system powers prediction markets like Polymarket, but it also serves DAO governance, IP disputes, smart contract audits, crosschain bridging, and many other use cases. Without UMA, these applications would have no way to verify such claims at scale in a decentralized, cost-efficient way.

Since launch, UMA’s OO has processed and resolved almost 60,000 assertions, securing nearly $40 billion in transaction volume, including some of the most high-stakes prediction markets in crypto.

But what if we could take the best of UMA’s human-powered oracle and make it faster, cheaper, and more scalable?

Unveiling UMA’s AI Proposer Experiment: The Optimistic Truth Bot

Today, we’re unveiling an AI experiment, the Optimistic Truth Bot, that’s been months in the making.

The Optimistic Truth Bot is a sophisticated AI system that monitors and processes prediction markets, primarily on Polymarket, and recommends how they should resolve. In effect, it stands in for a human participant in UMA’s Optimistic Oracle, filling the proposer role: proposing answers to data requests.

While we are still experimenting, the Optimistic Truth Bot is not yet making these proposals onchain. Right now, it runs in parallel with human proposers and publishes its recommendations live on X. As its accuracy improves, the goal is to plug it directly into the oracle.

This experiment isn’t our first attempt at integrating AI into UMA’s oracle system. Over the past two years, we’ve run experiments with LLMs roughly every six months, as new AI capabilities emerged. Each experiment had the same goal: exploring how reliably agentic systems could work within UMA’s oracle operations. We saw incremental progress with each iteration, but the results weren’t compelling enough until now.

This is the first time we’ve been genuinely excited about the quality and reliability of LLM systems for participating in a prediction market oracle.

In the overall stack, proposers answer every question that comes through the OO. Their answers are then publicly verified by another group of people or, in under 2% of cases, disputed and sent to a vote. Proposers are the backbone of the system, responsible for understanding each prediction market and recommending how it should resolve.

How the Optimistic Truth Bot Works

Router, Solvers, and Overseer: A 3-layer system

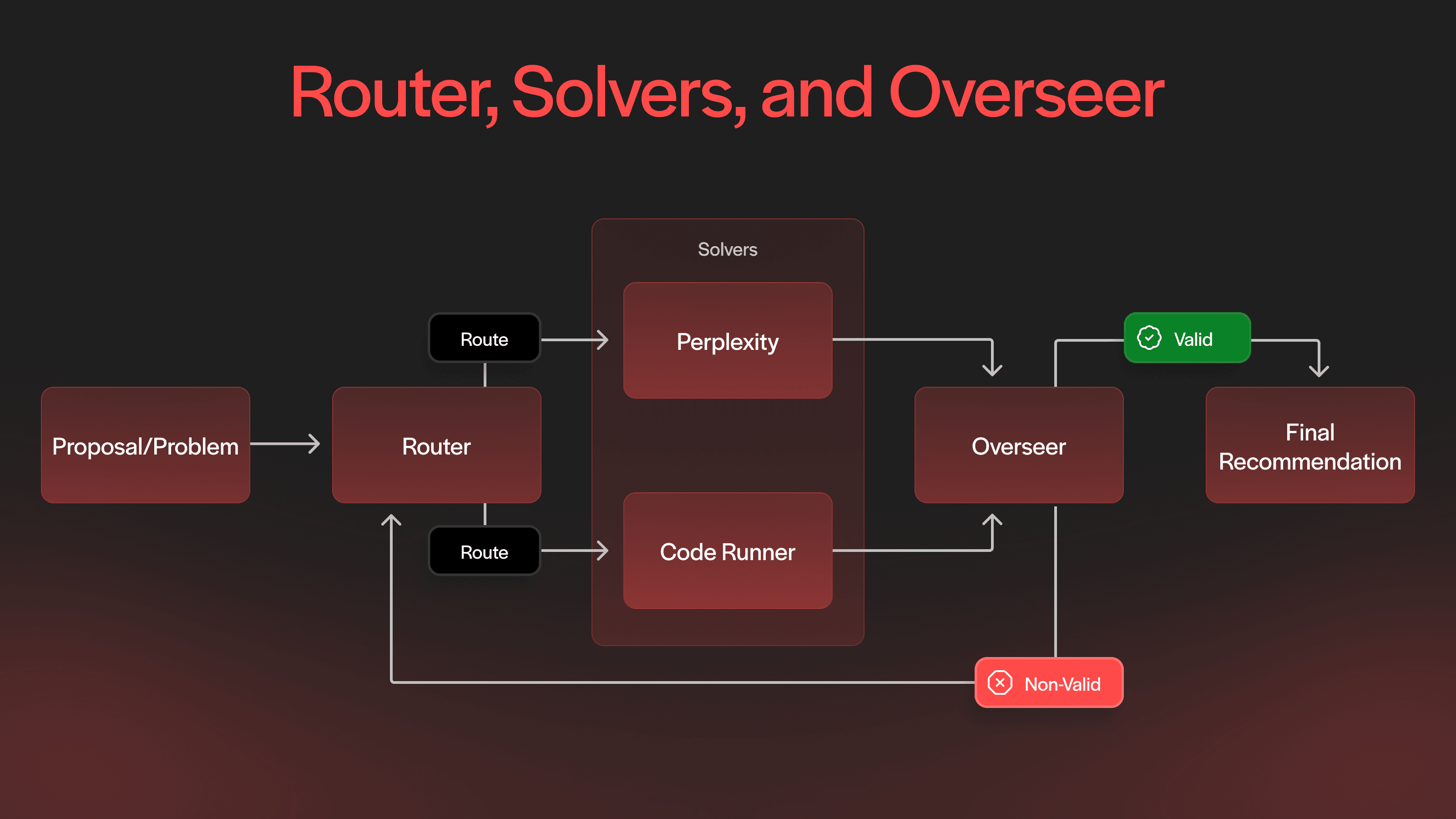

LLMs suffer from hallucination, confusing fact with fiction. To reduce the error rate, the Optimistic Truth Bot uses a modular agentic system. This is similar in spirit to the mixture-of-experts technique, in which an AI system is built from specialized sub-modules (experts) that handle different parts of the request-processing pipeline. The Optimistic Truth Bot’s agentic system has three main layers:

Router: Receives each prediction market request and decides where to route it. The Router has context on all available solvers, what they are good at, and their limitations, which lets it choose the best solver for the given market.

Solvers: Solvers work out how the prediction market should resolve. The solver architecture is modular and scalable, so additional solvers can be added as AI techniques improve. Each solver is specialized, running a constrained, specific method for solving markets. Currently, there are two main kinds of solvers:

Perplexity Solver: Handles the generic and open-ended questions by searching the web and synthesizing information from multiple sources.

Code Runner Solver: Writes custom code that queries APIs to solve prediction markets. For markets like sports or crypto prices, it is better to go directly to the source (e.g., querying Binance) than to search the internet for the results.

Overseer: Acts as a quality-control layer, validating the solvers’ answers by checking them against known patterns of hallucination, checking consistency with market pricing, and flagging edge cases. If the overseer is unhappy with a solver’s result, it can restart the whole flow, re-prompting the router with details on what went wrong. For example, the Perplexity Solver might make overreaching statements and inferences about a market’s result rather than sticking to the facts. The overseer then prompts the router to tell the Perplexity Solver to be more fact-oriented. The overseer can also tell the router to consider a multi-solver approach, corroborating results across different techniques.
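The router, solver, and overseer loop can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the bot’s actual code: LLM calls are replaced by plain functions, the routing rule and the 5%/95% market-price cutoffs are hypothetical, and for brevity the router is a fixed rule, so the overseer’s feedback goes straight to the chosen solver rather than back through the router.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Market:
    question: str
    category: str      # hypothetical labels, e.g. "sports", "crypto", "politics"
    yes_price: float   # market-implied probability of "Yes", between 0 and 1


@dataclass
class Attempt:
    solver: str
    answer: Optional[str]   # "P1".."P4", or None if the bot abstains
    feedback: List[str] = field(default_factory=list)


# A solver takes a market plus the overseer's feedback so far and returns a P-code.
Solver = Callable[[Market, List[str]], str]


def route(market: Market) -> str:
    """Router: pick the solver best suited to this kind of market."""
    if market.category in {"sports", "crypto"}:
        return "code_runner"   # query a data API directly
    return "perplexity"        # open-ended web search and synthesis


def oversee(market: Market, answer: str) -> Optional[str]:
    """Overseer: return a complaint, or None if the answer passes its checks."""
    # Consistency check against market pricing (the 5%/95% cutoffs are assumptions).
    if answer == "P2" and market.yes_price < 0.05:
        return "Yes conflicts with market pricing; stick to verifiable facts"
    if answer == "P1" and market.yes_price > 0.95:
        return "No conflicts with market pricing; stick to verifiable facts"
    return None


def resolve(market: Market, solvers: Dict[str, Solver], max_rounds: int = 3) -> Attempt:
    """Route, solve, and oversee, retrying with feedback until the overseer is satisfied."""
    name = route(market)
    feedback: List[str] = []
    for _ in range(max_rounds):
        answer = solvers[name](market, feedback)
        complaint = oversee(market, answer)
        if complaint is None:
            return Attempt(name, answer, feedback)
        feedback.append(complaint)  # re-prompt with what went wrong
    return Attempt(name, None, feedback)  # never satisfied: make no recommendation
```

Returning `None` when the overseer is never satisfied reflects the design goal of avoiding answers that cannot be defended; a fuller version might instead escalate to a multi-solver run.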

To see it in action, follow @OOTruthBot on X, where the Optimistic Truth Bot posts real-time recommendations on every proposal. Each tweet also links to a dashboard where you can view the prompt details and logs to understand its resolution process in depth.

Why We're Building This

So why build an AI proposer, especially if UMA has always been human-driven?

It’s simple.

UMA’s unique advantage lies in its permissionless and decentralized network of human voters who review, evaluate, and vote on complex intersubjective truths. These are the cases where a proposal is disputed (someone says it isn’t true) and the true answer requires human judgment and contextual understanding to resolve.

However, the preceding step of making a proposal, where someone makes a data claim, is often straightforward and could be made more efficient through AI. AI can improve the oracle by:

Reducing human error

Lowering costs

Avoiding premature proposals

Increasing proposer throughput

Letting human voters focus on tougher calls

The Optimistic Truth Bot uses strict guardrails and logic to analyze past events with verifiable outcomes. It also checks for time sensitivity, making sure it doesn’t propose answers too early: early proposals, disputed as P4 (“too early”), are the cause of 80% of current disputes.
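A time-sensitivity guard of this kind can be as simple as refusing to propose until the event has ended plus a safety margin. The sketch below is hypothetical: it assumes the market’s end time is already known, and the two-hour `settle_buffer` is an illustrative value, not the bot’s actual setting.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def too_early(event_end: datetime, now: datetime,
              settle_buffer: timedelta = timedelta(hours=2)) -> bool:
    """True if proposing now risks a P4 ("too early") dispute.

    event_end: when the question can first be answered (assumed known).
    settle_buffer: hypothetical margin for results to be published and confirmed.
    """
    return now < event_end + settle_buffer


def maybe_propose(answer: str, event_end: datetime,
                  now: Optional[datetime] = None) -> Optional[str]:
    """Return the answer if it is safe to propose, otherwise None (check again later)."""
    if now is None:
        now = datetime.now(timezone.utc)
    if too_early(event_end, now):
        return None
    return answer
```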

This experiment advances our vision for the Next Gen OO, a more efficient and advanced version of the Optimistic Oracle. Essentially, the Optimistic Truth Bot could lead us to a future where AI proposes and humans decide, making UMA the only oracle with AI efficiency and human oversight. This isn't just a theoretical concept; the Optimistic Truth Bot is already analyzing real-world events. Over the last few months, we've been tracking its performance in live prediction markets against human voters. The initial results are promising, generating insights that could shape the future of how UMA verifies and records truth onchain.

The Results So Far

Performance Benchmarks

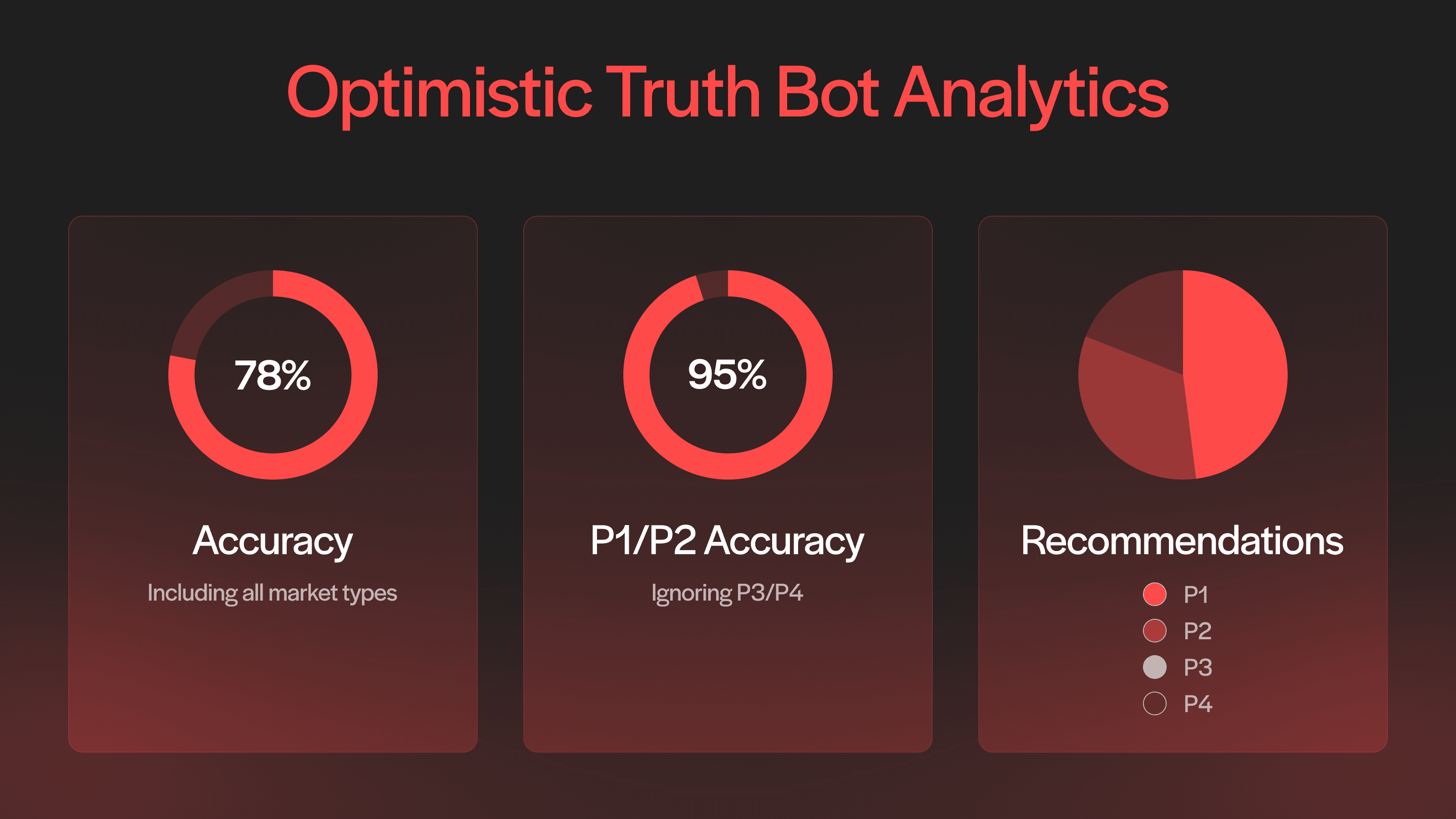

Early results have been exciting and informative. The Optimistic Truth Bot has processed over 3,000 markets to date, and we’ve learnt a lot about how to improve the system in that time. Its current real-time accuracy is ~78% across all market types.

To put this into context, UMA’s human participants have a remarkable track record: 98.2% of assertions in UMA’s OO are accepted without dispute. When disputes do occur, more than 80% of them are about timing (P4, “proposal too early”) rather than the accuracy of the proposal. This means only about 0.4% of all proposals face genuine challenges to their accuracy, and whenever a prediction market request has required a vote in UMA’s Data Verification Mechanism (DVM), UMA voters have converged on the truth.
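The 0.4% figure follows from the two numbers before it. A quick check, treating “more than 80%” as exactly 80% so that the result is an upper bound:

```python
undisputed_share = 0.982                   # 98.2% of assertions accepted without dispute
disputed_share = 1 - undisputed_share      # so about 1.8% of assertions are disputed
p4_fraction_of_disputes = 0.80             # "more than 80%" of disputes are about timing

# Upper bound on the share of all proposals challenged on accuracy rather than timing.
accuracy_disputes = disputed_share * (1 - p4_fraction_of_disputes)
print(f"{accuracy_disputes:.2%}")          # 0.36%, which rounds to the ~0.4% quoted
```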

Although the experiment’s results aren’t at that human benchmark yet, one signal is particularly promising: when we look only at markets resolved as No (P1) or Yes (P2), ignoring 50:50 splits (P3) and Too Early (P4) resolutions, the bot’s accuracy jumps to 95%. This suggests the Optimistic Truth Bot is approaching the high accuracy standard that humans on UMA have set for clear, decidable outcomes.

The bot is designed to avoid making statements it does not find fully defensible: we want to avoid false positives. Because it would rather hold back than commit to an answer it cannot support, this rigor explains the large gap between its overall accuracy and its accuracy when P4 results are ignored.
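To see how excluding P3 and P4 can move the headline number, here is a small scoring sketch. The filtering rule is an assumption: the post does not spell out whether markets are filtered by the bot’s recommendation or by the final resolution, and this version keeps markets where the bot recommended P1 or P2. The records in the usage note are made up for illustration and are not the bot’s results.

```python
from typing import Iterable, Tuple

# Each record: (bot_recommendation, final_resolution), using UMA's P-codes:
# P1 = No, P2 = Yes, P3 = 50-50, P4 = too early.
Record = Tuple[str, str]


def accuracy(records: Iterable[Record], only_decisive: bool = False) -> float:
    """Share of records where the bot's recommendation matched the final resolution.

    With only_decisive=True, keep only records where the bot recommended P1 or P2
    (an assumed reading of the "ignoring P3 and P4" breakdown).
    """
    rows = [(bot, final) for bot, final in records
            if not only_decisive or bot in {"P1", "P2"}]
    if not rows:
        raise ValueError("no records to score")
    return sum(bot == final for bot, final in rows) / len(rows)
```

With the illustrative records `[("P2", "P2"), ("P1", "P1"), ("P4", "P2"), ("P4", "P1"), ("P1", "P2")]`, overall accuracy is 2/5, while the decisive-only accuracy is 2/3: the two cautious P4 calls count against the bot in the first figure but drop out of the second.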

We're tracking performance across different categories, from crypto and sports to politics and entertainment, and it's clear that some types of markets are easier for the agentic system to resolve than others.

Markets like sports, asset prices, and simple factual questions prove the easiest for the bot to get right. This makes intuitive sense: straightforward questions with clear answers are easier to verify, and the code runner solver can achieve near-perfect results by simply calling an API. To date, the bot has achieved 99.3% accuracy on sports and asset-pricing markets.
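As an illustration of the “just call an API” path, the sketch below resolves a hypothetical “Will BTC close above a threshold?” market from Binance’s public klines endpoint (`/api/v3/klines`, whose rows start with open time, open, high, low, close). The market rule, the choice of a 1-minute candle, and the strict `>` comparison are assumptions for illustration; a real market’s rules define the source and exact candle, and the bot’s code runner writes its own code per market.

```python
import json
import urllib.parse
import urllib.request

KLINES_URL = "https://api.binance.com/api/v3/klines"


def close_from_kline(kline: list) -> float:
    """Binance kline rows are [open_time, open, high, low, close, volume, ...];
    prices arrive as strings."""
    return float(kline[4])


def fetch_close(symbol: str, start_ms: int) -> float:
    """Close price of the 1-minute candle opening at start_ms (requires network)."""
    query = urllib.parse.urlencode(
        {"symbol": symbol, "interval": "1m", "startTime": start_ms, "limit": 1})
    with urllib.request.urlopen(f"{KLINES_URL}?{query}", timeout=10) as resp:
        candles = json.load(resp)
    if not candles:
        raise LookupError("no candle returned for that time")
    return close_from_kline(candles[0])


def resolve_threshold_market(close: float, threshold: float) -> str:
    """Hypothetical rule for 'Will X close above <threshold>?': P2 = Yes, P1 = No."""
    return "P2" if close > threshold else "P1"
```

Keeping the network call separate from the decision rule makes the rule easy to test against recorded API responses.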

More open-ended prediction market questions remain challenging. Complex geopolitical topics, for example, are difficult to verify consistently, especially when there is nuance in the market wording or ambiguity around what actually happened on the ground. Polymarket hosts a wide range of these markets, with varying degrees of difficulty for LLM-driven systems.

Take markets like “How many times will Trump say the word ‘steel mill’ at the Pittsburgh rally on May 30?”: these are intrinsically difficult for an LLM to solve. Here, the Perplexity Solver must first locate information about the rally and find the associated livestream. Then it needs to extract the transcript and count how many times the phrase was said. Both steps are error-prone: finding the correct stream can be difficult, and transcript quality is often poor. To date, the bot gets these “mention markets” right 72% of the time, ignoring P4s (markets resolved as “too early”).
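The final counting step is the easy part once a clean transcript is in hand. The sketch below shows a naive whole-phrase, case-insensitive count; it does nothing about the hard parts named above (finding the right stream, poor transcripts), and its matching rules (hyphens allowed between words, plurals and longer words such as “millers” not counted) are assumptions, since each market’s own rules decide what counts as a mention.

```python
import re


def count_mentions(transcript: str, phrase: str) -> int:
    """Count case-insensitive, whole-phrase mentions of `phrase` in `transcript`.

    Words may be separated by whitespace or hyphens; a match must not sit inside
    a longer word, so "steel millers" does not count as "steel mill".
    """
    words = [re.escape(w) for w in phrase.split()]
    pattern = r"\b" + r"[\s\-]+".join(words) + r"\b"
    return len(re.findall(pattern, transcript, flags=re.IGNORECASE))
```

For example, `count_mentions("We love the steel mill. Steel-mill jobs! The steel millers... STEEL   MILL.", "steel mill")` counts three mentions.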

The discrepancy between simple and complex markets highlights why we’ve chosen the multi-solver architecture here: as better techniques are created and LLM technology continues to improve, additional solvers can be added into the mix. The router and overseer logic will harden and improve, and in time, the accuracy of the overall agentic system will trend upwards.

Building the Next-Gen OO in Public

This experiment reflects our commitment to building in public and sharing both successes and challenges transparently with our community. The Optimistic Truth Bot currently has an overall accuracy of 78%, and we’re inviting you to follow along as we keep improving it and work toward human-level accuracy.

Think of this as an exploration into how AI complements rather than competes with humans, helping us understand the strengths, limitations, and emerging role of AI agents in onchain truth verification.

We'll continue sharing regular updates on our findings, wins, and learnings, and engaging with the community as the experiment progresses.

The Future of Onchain Truth

This experiment sits at the intersection of two powerful trends: the rise of AI and the growing importance of verifiable truth in an era of misinformation. If AI will play a role in how the world verifies what’s true, that system needs human oversight, transparency, and accountability. UMA is building exactly that, and we’re doing it in the open.

Additionally, UMA isn't building a new, unproven AI platform to tap into a trendy narrative; we’re plugging AI into existing technology with proven results. The Optimistic Oracle has real demand, real economic incentives for participation, and decentralized human oversight, so it is robust to participant errors. This is the perfect opportunity for AI to participate in truthmaking and help scale the oracle.

UMA is more than just infrastructure. It’s a protocol built on optimism: that truth can scale, that humans and machines can collaborate, and that the future of information doesn’t have to be chaos. The Optimistic Oracle is how we verify truth. The Optimistic Truth Bot is how we make that process faster, fairer, and future-proof.

Follow @OOTruthBot and @UMAprotocol.