The Economics of Truth: How Economic Incentives Create Reliable Oracles

TLDR

Most oracles focus on technical complexity to secure data, but UMA takes a different approach: it uses economic incentives to make honesty the most profitable strategy. By turning truth into a game where lying is expensive, UMA’s oracle has delivered 98.6% dispute-free resolutions and secured billions in value. This post explores how UMA’s model works, the research that supports it, and why incentives—paired with cryptography—may be the future of decentralized truth.

Introduction

What is the price of truth? For oracles, it’s exactly the cost of corruption.

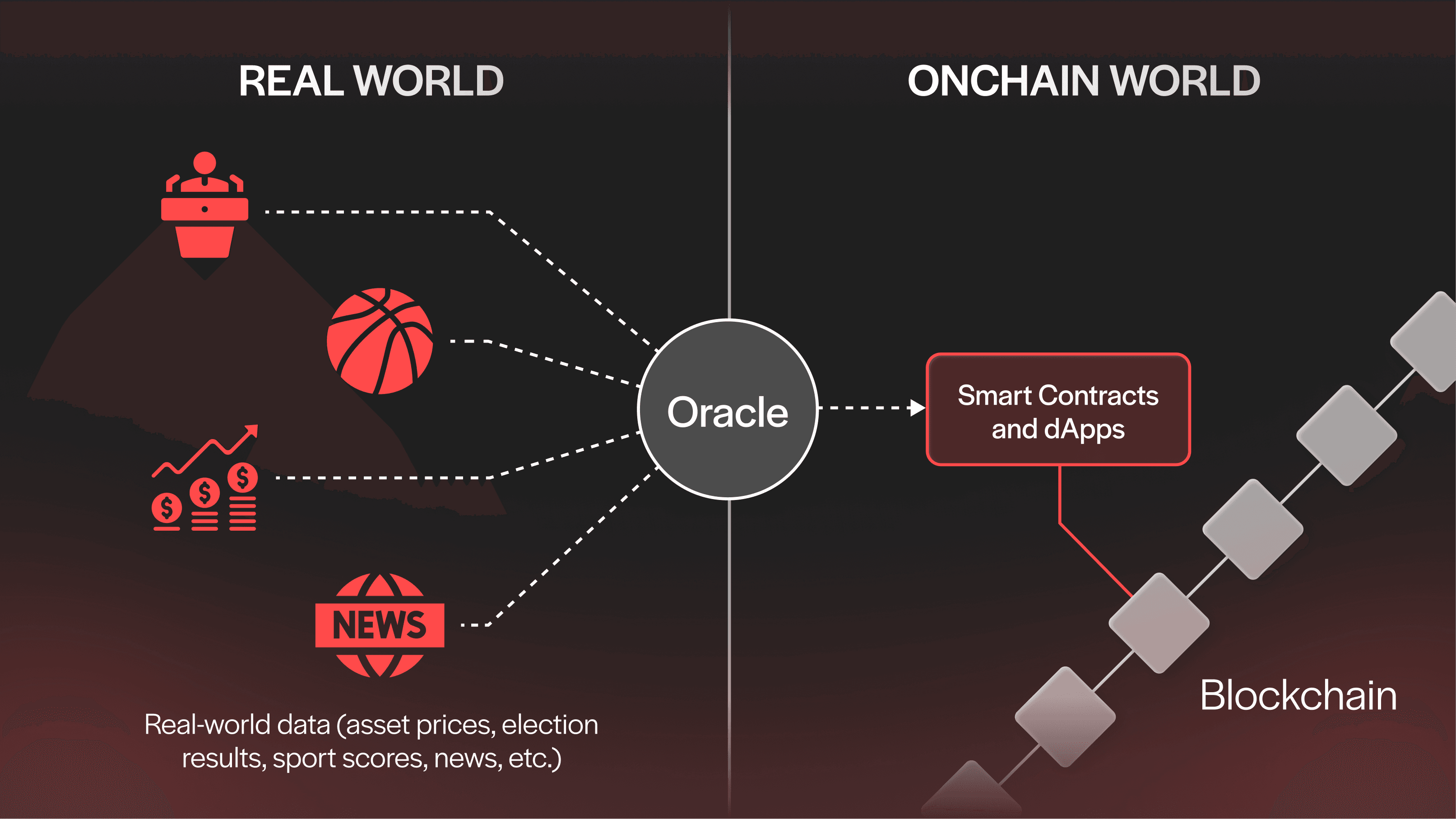

Oracles are the systems that feed real-world data to smart contracts. They are essential to every decentralized system, but they’re also one of the weakest links. If an oracle gets it wrong, everything built on top of it breaks, no matter how secure the smart contract is.

Most projects try to solve this with more cryptography, more complexity, or more validators. UMA takes a different approach: it creates a market for truth in a game-theoretic environment where being honest is the most profitable strategy. Instead of trying to eliminate all trust through increasingly complex cryptography, UMA assumes participants will behave honestly when honesty pays better than lying.

In this piece, we’ll explore how UMA’s Optimistic Oracle (OO) flips the oracle problem on its head using a simple idea: when incentives are aligned, the truth wins. We’ll look at the research behind this model, the results it’s delivered in the real world, and why economic incentives may be the most reliable way to secure data onchain. Let’s dive in.

The Oracle Problem, Reimagined

Blockchains are powerful—but they’re blind.

They can verify onchain transactions with perfect precision, but they have no built-in way to access offchain information like asset prices, weather data, or election results. That’s where blockchain oracles come in.

Blockchain oracles act as bridges between offchain reality and onchain logic, feeding smart contracts the offchain data they need to function. Without oracles, DeFi protocols couldn’t liquidate loans, prediction markets couldn’t resolve outcomes, and automated systems couldn’t react to real-world events.

However, plugging offchain data into a blockchain introduces a new problem: what if the oracle is wrong? No matter how secure your smart contract is, if it receives bad data, it produces a bad outcome. And since oracles operate outside the blockchain’s consensus layer, they don’t inherit the security guarantees that consensus provides.

The paper Reliability Analysis for Blockchain Oracles puts it bluntly: oracles are offchain components that “could be points of failure.” It argues that reducing oracle risk isn’t just a technical challenge; it’s an economic one.

At UMA, we agree. That’s why the OO doesn’t try to eliminate risk altogether. Instead, it introduces a simple economic premise: when lying costs more than it’s worth, the truth becomes inevitable.

The Economics of Truth Markets

At the heart of the OO is a concept called optimistic assertion. Anyone can submit data to the oracle (like “Bitcoin closed at $88,000 yesterday”) and back the claim with a bond. If no one disputes the assertion before its challenge period (the liveness window) ends, it’s considered true. If someone does challenge it, the system escalates to a decentralized vote in which voters are financially rewarded for voting truthfully.
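To make the assert, dispute, and settle flow concrete, here is a minimal Python model of a single assertion’s lifecycle. It is a sketch, not UMA’s contract code: the class and method names are hypothetical, the 7200-second liveness is an assumed value, and bond payouts are left out so the state transitions stay readable.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    PENDING = "pending"    # asserted; challenge window still open
    DISPUTED = "disputed"  # challenged; escalated to a token-holder vote
    SETTLED = "settled"    # final outcome recorded


@dataclass
class Assertion:
    claim: str
    asserter: str
    bond: float
    asserted_at: int       # seconds on a hypothetical clock
    liveness: int = 7200   # challenge window in seconds (assumed value)
    status: Status = Status.PENDING
    disputer: Optional[str] = None
    result: Optional[bool] = None

    def dispute(self, disputer: str, bond: float, now: int) -> None:
        # Disputes are only accepted while the challenge window is open.
        if self.status is not Status.PENDING or now >= self.asserted_at + self.liveness:
            raise ValueError("challenge window closed")
        # The disputer must put up at least as much as the asserter.
        if bond < self.bond:
            raise ValueError("disputer must match the asserter's bond")
        self.disputer = disputer
        self.status = Status.DISPUTED

    def settle(self, now: int, vote_result: Optional[bool] = None) -> Optional[bool]:
        if self.status is Status.PENDING:
            # Optimistic path: nobody disputed before liveness expired, so the claim stands.
            if now < self.asserted_at + self.liveness:
                raise ValueError("challenge window still open")
            self.result = True
        elif self.status is Status.DISPUTED:
            # Escalated path: the outcome comes from the decentralized vote.
            if vote_result is None:
                raise ValueError("a disputed assertion needs a vote result")
            self.result = vote_result
        # Already-settled assertions simply return their recorded result.
        self.status = Status.SETTLED
        return self.result
```

The key design point is that the vote is only consulted on the disputed path; in the common case, an assertion settles by nobody objecting.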

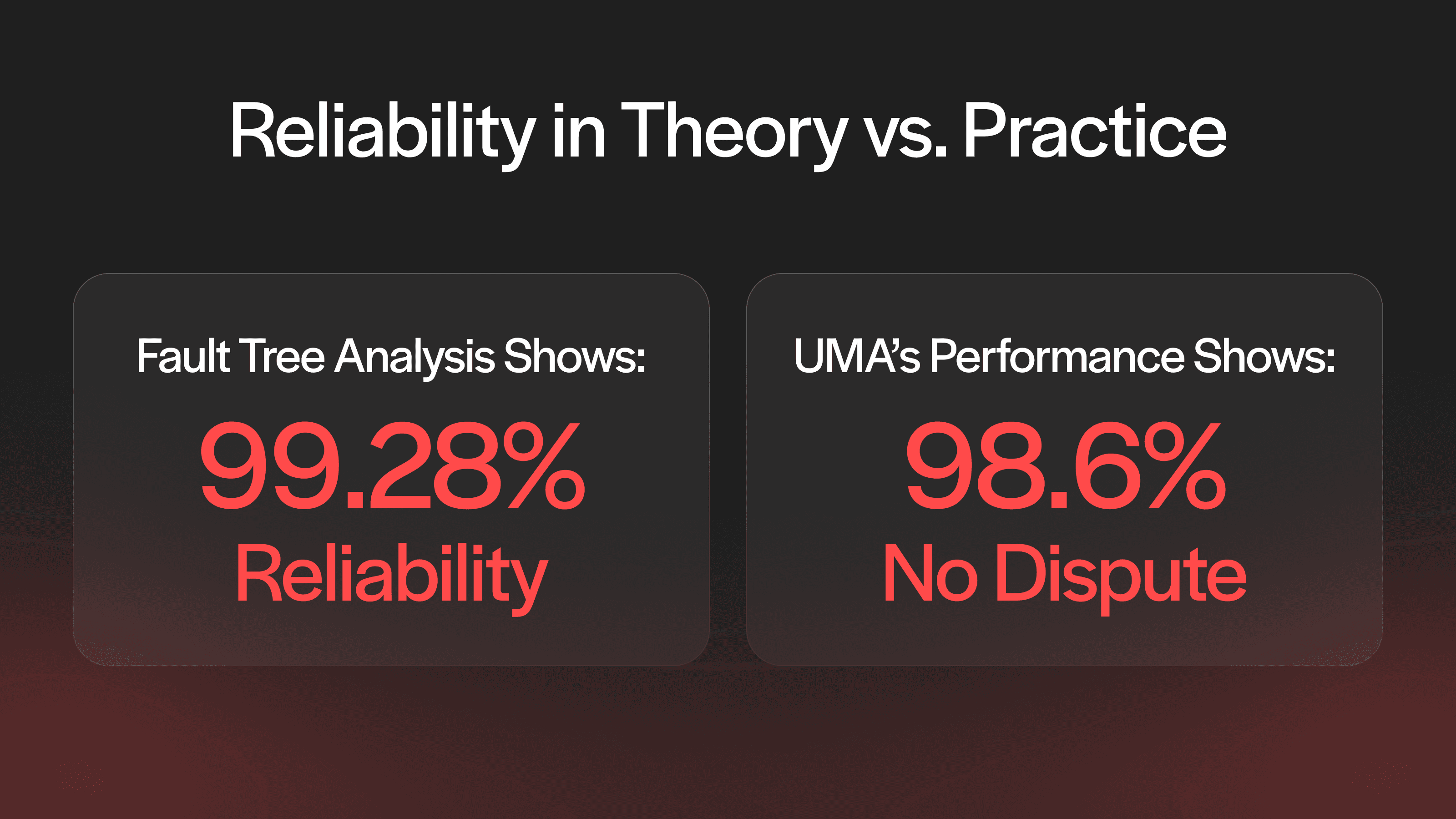

This system turns truth into an open market where honesty is profitable and dishonesty is expensive. The model is also supported by research: a fault tree analysis of oracle reliability found that oracles using well-structured economic incentives can achieve 99.28% reliability.

UMA’s internal metrics point in the same direction: 98.6% of requests are settled without a dispute.

What does this tell us? That in practice, participants overwhelmingly treat honesty as the most profitable strategy.
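Fault tree analysis works by combining the probabilities of basic failure events through logic gates until it reaches a top-level failure. The sketch below shows only those mechanics. The probabilities and the tree are made up for illustration, the basic events are assumed to be independent, and it does not reproduce the 99.28% figure or the cited study’s actual model.

```python
from math import prod


def and_gate(*probs: float) -> float:
    """Output event occurs only if every (independent) input event occurs."""
    return prod(probs)


def or_gate(*probs: float) -> float:
    """Output event occurs if at least one (independent) input event occurs."""
    return 1 - prod(1 - p for p in probs)


# Hypothetical basic-event probabilities, for illustration only.
p_bad_assertion = 0.10   # a proposer submits incorrect data
p_unchallenged = 0.20    # nobody disputes it within the challenge window
p_vote_wrong = 0.01      # an escalated vote resolves incorrectly

# Top event: the oracle finalizes wrong data, either because a bad assertion
# slips through unchallenged (AND gate) or because the vote itself fails.
p_failure = or_gate(and_gate(p_bad_assertion, p_unchallenged), p_vote_wrong)
reliability = 1 - p_failure
print(f"P(failure) = {p_failure:.4f}, reliability = {reliability:.2%}")
```

Lowering any basic-event probability, for example by having more watchers so that `p_unchallenged` shrinks, lowers the top-event probability. That is the lever that bonds and open disputing pull on.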

Other researchers arrived at a similar conclusion in the paper Infochain: A Decentralized, Trustless and Transparent Oracle. It introduces a peer-consistency incentive mechanism, where each participant is rewarded based on how closely their answer matches the majority. This approach makes lying economically irrational, just like UMA’s model: when rewards are tied to consensus-aligned answers, truth becomes the best-paying option.

In both cases, the design philosophy is the same: build a system where deviating from the truth becomes economically irrational.
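A peer-consistency rule can be illustrated with a hypothetical scoring function for numeric answers. This is not Infochain’s exact mechanism. It is a sketch of the same idea under assumed parameters: each report is scored by its distance from the median of all reports, and a reward pool is split in proportion to those scores.

```python
from statistics import median


def peer_consistency_rewards(reports: dict[str, float], pool: float, tolerance: float) -> dict[str, float]:
    """Split `pool` by how close each report is to the median of all reports.

    Hypothetical scoring rule: a report at the median scores 1, the score falls
    linearly to 0 at `tolerance` away from it, and the pool is shared pro rata.
    """
    consensus = median(reports.values())
    scores = {
        who: max(0.0, 1 - abs(value - consensus) / tolerance)
        for who, value in reports.items()
    }
    total = sum(scores.values())
    if total == 0:
        return {who: 0.0 for who in reports}
    return {who: pool * score / total for who, score in scores.items()}


reports = {"alice": 100.0, "bob": 100.0, "carol": 101.0, "mallory": 150.0}
print(peer_consistency_rewards(reports, pool=90.0, tolerance=10.0))
```

Here `mallory`, whose report is far from the consensus, receives nothing, while the three close reports share the pool. Using the median rather than the mean keeps a single outlier from dragging the consensus toward itself.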

Designing Incentives that Actually Work

UMA’s design works because it effectively aligns incentives for everyone involved. Participants have skin in the game at every level:

To make a data assertion, you must provide a bond. If you’re deemed correct, you earn a reward. If you’re deemed incorrect, you lose your bond.

To dispute an assertion, you must match the asserter’s bond. If you’re deemed correct, you earn a reward. If you’re deemed incorrect, you lose your bond.

To vote on disputes, you must stake UMA tokens. You are rewarded when you vote accurately and slashed when you vote inaccurately.
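Why do bonds deter false assertions? A simplified expected-value model for an asserter makes this clear. All of its assumptions are hypothetical: a correct assertion always survives and earns `reward`. A false one earns `reward` plus an outside manipulation gain `gain_if_lie` only if nobody disputes it, which happens with probability `1 - p_dispute`. If it is disputed, the vote rejects it and the bond is lost. Disputers face the mirror image of this calculation.

```python
def asserter_ev(bond: float, reward: float, gain_if_lie: float, p_dispute: float, honest: bool) -> float:
    """Expected payoff for an asserter under a simplified, hypothetical bond model."""
    if honest:
        # Assumed: a correct assertion always survives (undisputed, or upheld by the vote).
        return reward
    # A false assertion pays off only if nobody disputes it; otherwise the bond is lost.
    return (1 - p_dispute) * (reward + gain_if_lie) - p_dispute * bond


def min_deterrent_bond(reward: float, gain_if_lie: float, p_dispute: float) -> float:
    """Smallest bond at which lying is no more profitable than telling the truth."""
    if not 0 < p_dispute <= 1:
        raise ValueError("p_dispute must be in (0, 1]")
    return max(0.0, ((1 - p_dispute) * (reward + gain_if_lie) - reward) / p_dispute)


# Hypothetical numbers: a $10,000 manipulation gain and a 90% chance of being disputed.
print(min_deterrent_bond(reward=50.0, gain_if_lie=10_000.0, p_dispute=0.9))
print(asserter_ev(500.0, 50.0, 10_000.0, 0.9, honest=False))    # lying beats the 50 reward
print(asserter_ev(5_000.0, 50.0, 10_000.0, 0.9, honest=False))  # lying now loses money
```

The break-even bond grows with the manipulation gain and shrinks as disputes become more likely. This is why an open, well-watched dispute process lets bonds stay moderate.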

Stakers earn yield (currently ~21% APR) in exchange for voting on oracle disputes, but they also risk slashing penalties if they vote inaccurately. Voting uses a commit-reveal scheme: each voter first submits a hidden commitment and reveals the actual vote only later, so participants can’t see others’ votes before submitting their own. This makes it much harder to copy votes or coordinate around a manipulated answer.
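A commit-reveal round can be sketched in a few lines. This is a generic illustration rather than UMA’s implementation: the function names are hypothetical, and it uses SHA-256 from Python’s standard library, whereas an on-chain version would typically hash with keccak256. The random salt matters because there are only a few possible votes. Without it, anyone could hash each candidate answer and compare.

```python
import hashlib
import secrets


def commit(vote: str, salt: bytes) -> str:
    """Commit phase: publish only this hash, which binds the voter to `vote` without revealing it."""
    return hashlib.sha256(salt + vote.encode("utf-8")).hexdigest()


def reveal_ok(commitment: str, vote: str, salt: bytes) -> bool:
    """Reveal phase: check that the revealed vote and salt reproduce the earlier commitment."""
    return secrets.compare_digest(commit(vote, salt), commitment)


# A voter commits during the commit phase...
salt = secrets.token_bytes(32)  # fixed-length random salt, kept private until reveal
commitment = commit("YES", salt)

# ...and reveals later; a changed vote no longer matches the commitment.
print(reveal_ok(commitment, "YES", salt))  # True
print(reveal_ok(commitment, "NO", salt))   # False
```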

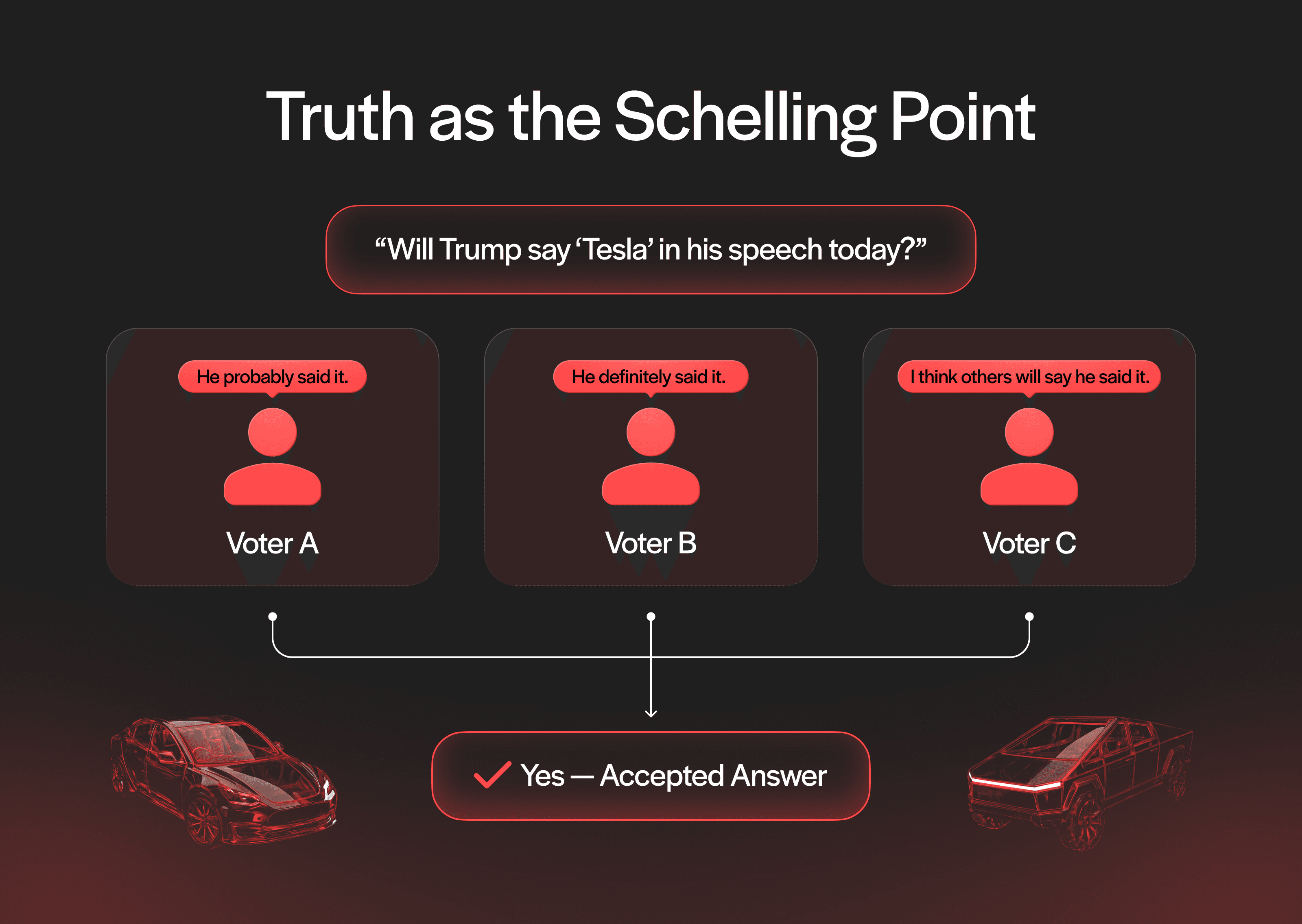

UMA’s oracle relies on a Schelling point mechanism. A Schelling point is a game-theory concept: people who can’t communicate tend to converge on the most obvious or natural answer, because they assume others will do the same. In UMA’s case, that answer is the truth. Rational stakers are incentivized to vote honestly not just because it’s right, but because they expect others to do the same, and matching the majority is how they get paid.

This dynamic turns truth into the equilibrium strategy. As long as participants believe others will vote truthfully, voting for anything else becomes economically irrational. That’s the core of UMA’s design: make honesty the most profitable move in the game.
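The equilibrium argument reduces to a one-line expected-value comparison. In this hypothetical model, a staker is paid `reward` for matching the majority and loses `slash` otherwise. The staker is also assumed to be too small to change the majority alone. The reward and slash sizes are illustrative, not UMA’s actual parameters.

```python
def vote_ev(p_majority_truthful: float, reward: float, slash: float, vote_truthfully: bool) -> float:
    """Expected payoff for one staker who is paid for matching the majority and slashed otherwise.

    p_majority_truthful is the staker's belief that the majority will vote for the truth.
    """
    p_match = p_majority_truthful if vote_truthfully else 1 - p_majority_truthful
    return p_match * reward - (1 - p_match) * slash


# Hypothetical reward and slash sizes; the sign of the gap flips at a belief of 0.5.
for belief in (0.3, 0.5, 0.7, 0.9):
    honest = vote_ev(belief, reward=10.0, slash=10.0, vote_truthfully=True)
    dishonest = vote_ev(belief, reward=10.0, slash=10.0, vote_truthfully=False)
    print(f"belief={belief:.1f}  honest={honest:+.2f}  dishonest={dishonest:+.2f}")
```

The gap between the two payoffs is `(2 * belief - 1) * (reward + slash)`. Honest voting wins whenever a staker expects the majority to be truthful, and a larger slash widens that margin.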

This model works not just because it punishes dishonesty, but because it plugs validators (voters) into a recurring system of value. Another research piece, Reputation as Contextual Knowledge: Incentives and External Value in Truthful Blockchain Oracles, notes that oracle designs are most reliable when incentives aren’t siloed, but connected to broader networks where participation is ongoing and reputation carries weight. UMA’s design already leans in that direction, rewarding honest behavior over time, not just in one-off cases.

Fault analysis research also highlights how scaling up the number of validators and aligning their incentives can reduce failure probabilities even further. UMA’s model puts this into practice, creating a dynamic where economic pressure acts as both carrot and stick.

While UMA doesn’t yet implement reputation as a standalone feature, the philosophical alignment is clear: the best oracles are designed to reward honesty in context.
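The claim that more validators reduce failure probability can be illustrated with the classic majority-vote calculation behind the Condorcet jury theorem. This is a stand-in for intuition, not the cited fault-analysis model. It assumes every voter is independently correct with the same probability, which real systems only approximate, since correlated mistakes or bribery break independence. That gap is exactly what the economic incentives are meant to cover.

```python
from math import comb


def majority_failure(n: int, p_correct: float) -> float:
    """Probability that a strict majority of n independent voters is wrong.

    Assumes each voter is independently correct with probability p_correct.
    """
    if n % 2 == 0:
        raise ValueError("use an odd number of voters to avoid ties")
    p_wrong = 1 - p_correct
    need = n // 2 + 1  # wrong votes needed to flip the outcome
    return sum(comb(n, k) * p_wrong**k * p_correct**(n - k) for k in range(need, n + 1))


# With each voter 80% reliable (hypothetical), the majority's error rate drops as n grows.
for n in (1, 5, 21, 101):
    print(n, f"{majority_failure(n, 0.8):.2e}")
```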

From Research to Real-World Results

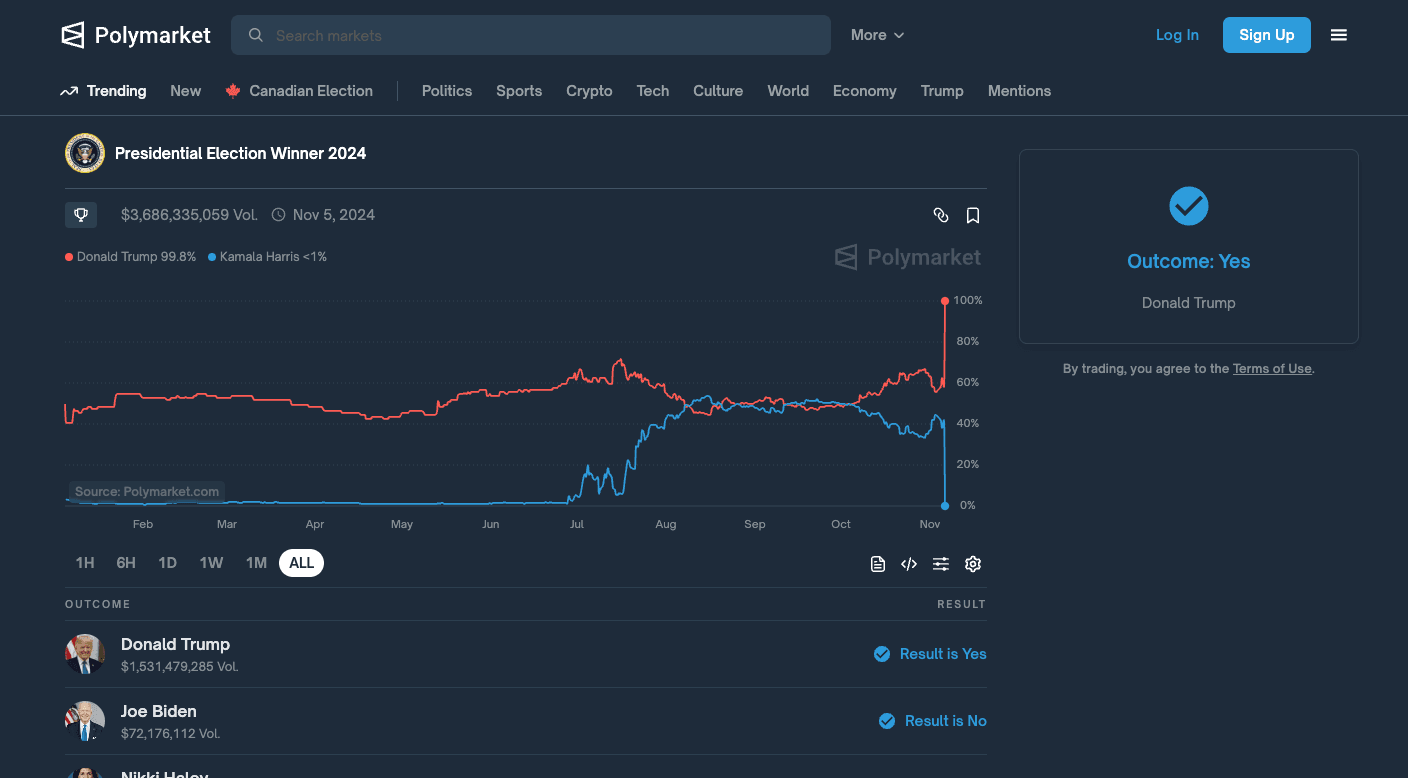

UMA’s OO isn’t just theoretical; it’s battle-tested. The 2024 U.S. Presidential Election market on Polymarket was the largest political prediction market in history. UMA settled this market without a dispute, securing over $3.6 billion in open interest. With thousands of data points, this event showed that a decentralized economic model can scale to real-world, high-stakes environments.

UMA’s open participation model means anyone with capital can become a validator (voter) and resolve disputes. This democratization of truth verification is a massive step forward from centralized feeds or fixed validator sets.

Researchers argue that decentralized oracle systems, when guided by the right incentives, are more reliable and more resistant to failure than centralized alternatives. UMA’s performance in real-world, high-stakes environments, such as securing billions in prediction markets with a 98.6% dispute-free resolution rate, supports that point in practice: economic incentives can reliably govern truth at scale.

The Future of Truth: Open, Aligned, and Economic

UMA’s oracle model doesn’t rely on a fixed committee or a privileged set of data providers. Instead, it’s open by design. Anyone with capital and conviction can participate in validating truth by asserting data, disputing incorrect claims, or staking UMA tokens to vote. This open participation model turns the oracle into a marketplace for truth, one where economic skin in the game keeps everyone honest.

The research supports this approach, revealing that decentralized oracle systems, when properly incentivized, outperform centralized ones in both reliability and resilience. In UMA’s system, the same dynamic plays out: when the cost of corruption outweighs the potential reward, lying simply doesn’t pay.
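“Cost of corruption outweighs the potential reward” can be turned into a back-of-the-envelope check. The model below is deliberately crude and entirely hypothetical. It assumes an attacker must buy about half of the staked voting tokens at a flat price. It ignores price impact from buying, slashing, and the likely drop in token value after a visible attack, all of which would raise the real cost.

```python
def cost_of_corruption(staked_tokens: float, token_price: float, control_share: float = 0.5) -> float:
    """Rough cost to acquire `control_share` of the staked voting power at a flat price."""
    return staked_tokens * control_share * token_price


def corruption_pays(profit_from_corruption: float, staked_tokens: float, token_price: float) -> bool:
    """True when what an attacker could extract exceeds the rough cost of buying the vote."""
    return profit_from_corruption > cost_of_corruption(staked_tokens, token_price)


# Hypothetical figures: 30M tokens staked at $2 gives a rough cost of corruption of $30M.
print(corruption_pays(10_000_000, staked_tokens=30_000_000, token_price=2.0))  # False
print(corruption_pays(50_000_000, staked_tokens=30_000_000, token_price=2.0))  # True
```

Because the flat-price assumption leaves out the extra costs listed above, this estimate tends to understate what a real attack would cost.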

Over time, this model makes UMA’s oracle more secure and more trustworthy. By creating financial incentives that reward alignment with reality, UMA has laid the foundation for more reliable prediction markets, more secure DeFi protocols, and a future where trustless data is truly decentralized.

As Web3 evolves into InfoFi and beyond, the need for scalable, permissionless truth verification will only grow. UMA’s economic design isn’t just a solution; it’s a signal that in the world of oracles, incentives are infrastructure.